15th Conference on Computer and Robot Vision 2018 - Deep Autoencoders with Aggregated Residual Transformations for Urban Reconstruction from Remote Se

Join us at the 15th Conference on Computer and Robot Vision 2018

ICT lab researcher Timothy Forbes will be presenting our work on “Deep Autoencoders with Aggregated Residual Transformations for Urban Reconstruction from Remote Sensing Data”. This work is co-authored with Timothy Forbes and Charalambos Poullis.

Abstract:

In this work we investigate urban reconstruction and propose a complete and automatic framework for reconstructing urban areas from remote sensing data.

Firstly, we address the complex problem of semantic labeling and propose a novel network architecture named SegNeXT which combines the strengths of deep-autoencoders with feed-forward links in generating smooth predictions and reducing the number of learning parameters, with the effectiveness which cardinality-enabled residual-based building blocks have shown in improving prediction accuracy and outperforming deeper/wider network architectures with a smaller number of learning parameters. The network is trained with benchmark datasets and the reported results show that it can provide at least similar and in some cases better classification than state-of-the-art.

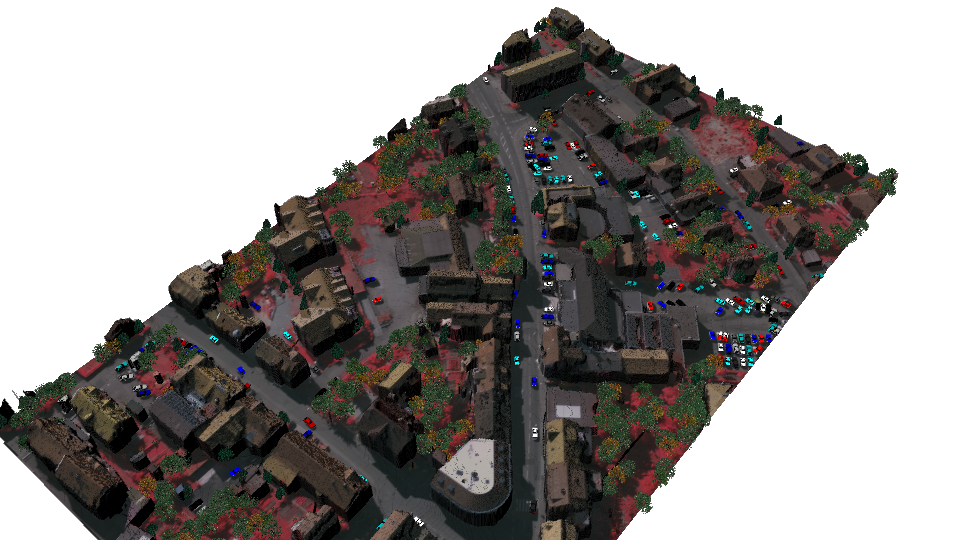

Secondly, we address the problem of urban reconstruction and propose a complete pipeline for automatically converting semantic labels into virtual representations of the urban areas. An agglomerative clustering is performed on the points according to their classification and results in a set of contiguous and disjoint clusters. Finally, each cluster is processed according to the class it belongs: tree clusters are substituted with procedural models, cars are replaced with simplified CAD models, buildings’ boundaries are extruded to form 3D models, and road, low vegetation, and clutter clusters are triangulated and simplified.

The result is a complete virtual representation of the urban area. The proposed framework has been extensively tested on large-scale benchmark datasets and the semantic labeling and reconstruction results are reported.